Rendering & Animation

The following list is a selection of rendering and animation tasks that I completed, in no particular order, while working at Mimic Technologies, Inc. List items with active links will take you directly to additional sample material on this page showing intermediate or final results.

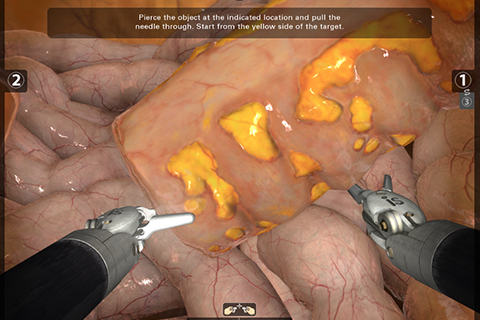

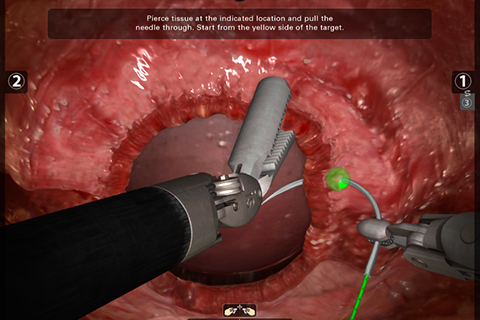

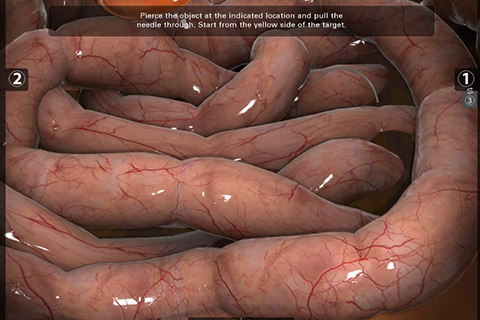

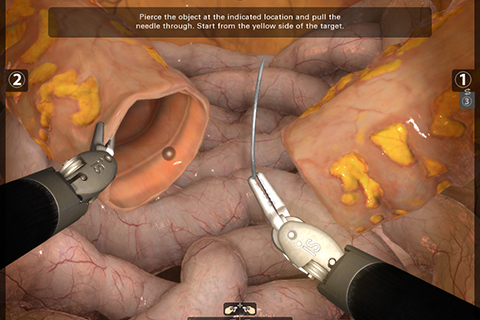

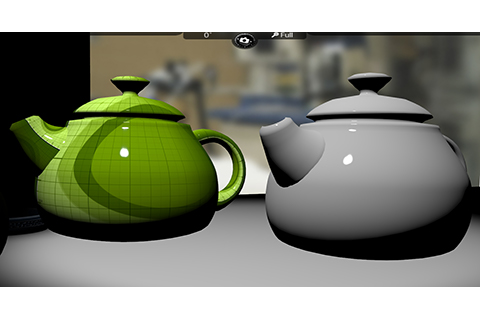

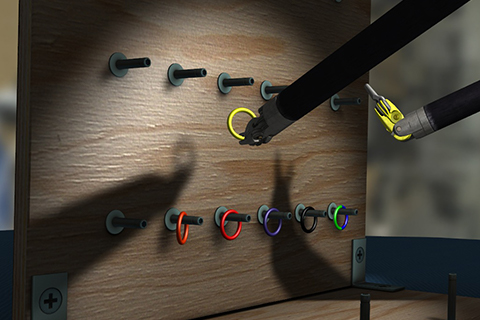

Physically-based Rendering

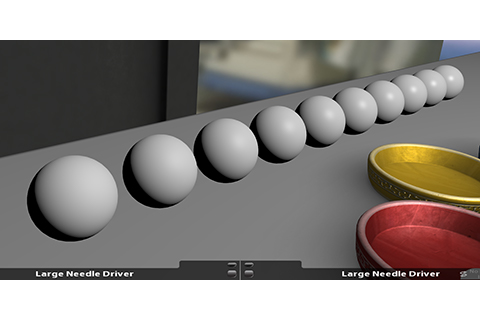

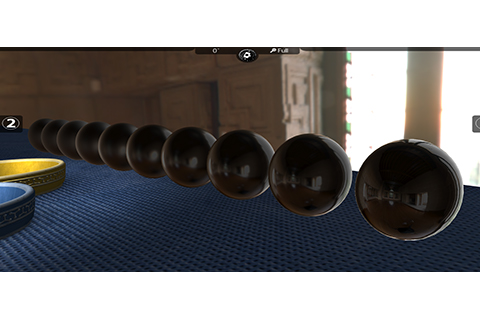

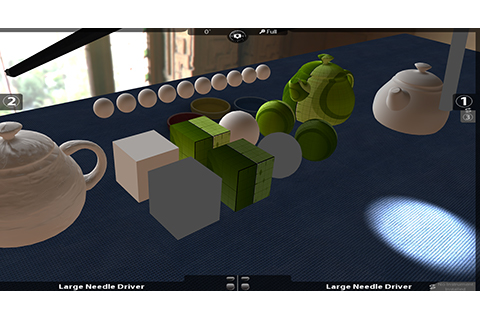

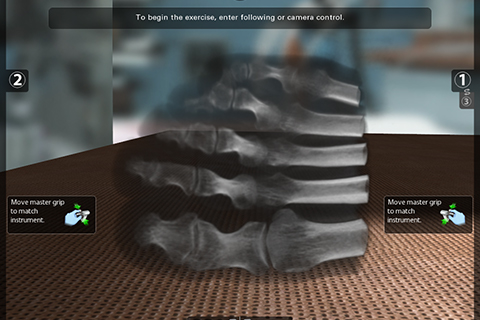

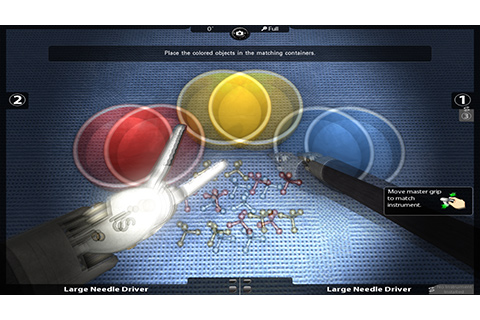

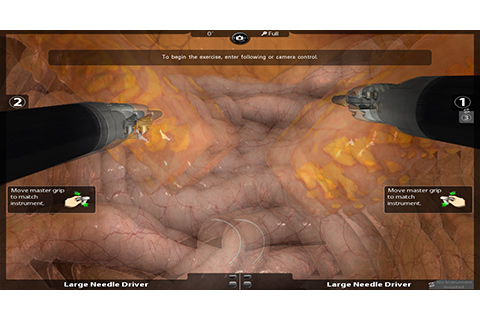

I designed a physically-based lighting and shading model for the simulator. The following section of images shows some final results:

The next set of images demonstrates various components of the lighting model:

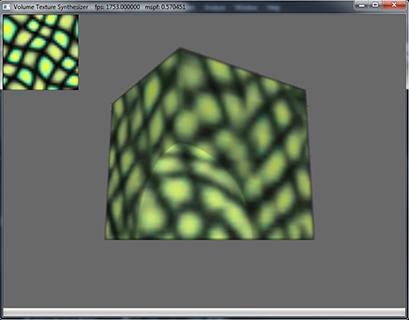

Volume Rendering

The following video shows the integration of a volume renderer with a forward renderer in a prototyping environment. The second image is a screenshot of a volumetric texture synthesis tool I developed. The tool procedurally generates a volume texture based on a given 2D example texture. Notice how the image pattern remains consistent no matter how you slice through the volume.
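This page does not describe how the volume renderer was combined with the forward renderer. As a rough illustration only, one common approach is to march each view ray through the volume, composite samples front to back, stop at the depth of the opaque scene geometry, and blend whatever transmittance remains with the forward-rendered color. The function name `march_ray` and all of its parameters are hypothetical and chosen for this sketch.

```python
def march_ray(samples, step_size, scene_depth, scene_color):
    """Front-to-back compositing of volume samples along one view ray.

    samples      -- list of ((r, g, b), opacity) pairs, one per step (hypothetical layout)
    step_size    -- distance between consecutive samples along the ray
    scene_depth  -- distance to the opaque surface drawn by the forward renderer
    scene_color  -- (r, g, b) color of that opaque surface
    """
    color = [0.0, 0.0, 0.0]
    alpha = 0.0
    for i, (rgb, opacity) in enumerate(samples):
        # Stop once the ray reaches opaque geometry or is nearly saturated.
        if i * step_size >= scene_depth or alpha >= 0.99:
            break
        # Each sample contributes in proportion to the remaining transmittance.
        weight = (1.0 - alpha) * opacity
        for c in range(3):
            color[c] += weight * rgb[c]
        alpha += weight
    # Whatever light is not absorbed by the volume comes from the scene behind it.
    return tuple(color[c] + (1.0 - alpha) * scene_color[c] for c in range(3))


# A half-opaque red sample in front of a blue surface.
blended = march_ray([((1.0, 0.0, 0.0), 0.5)], 0.1, 1.0, (0.0, 0.0, 1.0))
```

Terminating the march at the scene depth is what lets volume content be correctly hidden behind forward-rendered geometry in this kind of setup.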

Passive / Interlaced 3D Stereo / Active 3D Stereo

The next set of images requires a 1080p passive 3D monitor and matching 3D glasses to perceive the stereo effect. The image must also be displayed in full-screen mode to align the image with the monitor's interlacing. I developed this form of 3D stereo to allow viewing the simulation in stereo when only a monitor, and no stereoscope, was available.
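To illustrate why full-screen alignment matters, here is a minimal sketch of the interlaced format, assuming the monitor alternates eyes on every other pixel row (typical for passive 3D displays, but not confirmed on this page). The function `interleave_rows` and the `left_on_even` flag are hypothetical names; which eye owns the even rows depends on the specific monitor.

```python
def interleave_rows(left, right, left_on_even=True):
    """Build a row-interlaced stereo frame from a left-eye and a right-eye image.

    left, right   -- images as lists of rows, each row a list of pixels
    left_on_even  -- assumption: whether even rows belong to the left eye
    """
    if len(left) != len(right):
        raise ValueError("left and right images must have the same height")
    frame = []
    for y, (left_row, right_row) in enumerate(zip(left, right)):
        if len(left_row) != len(right_row):
            raise ValueError("left and right images must have the same width")
        # Alternate the source eye on every row.
        use_left = (y % 2 == 0) == left_on_even
        frame.append(list(left_row if use_left else right_row))
    return frame


# Tiny 4x2 example: rows alternate between the left and right views.
left_view = [["L", "L"] for _ in range(4)]
right_view = [["R", "R"] for _ in range(4)]
frame = interleave_rows(left_view, right_view)
```

If the frame is shifted by a single pixel row on screen, for example by a window title bar, each eye receives the other eye's rows, which is why the image has to be shown full-screen.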

I also developed an active 3D stereo system for viewing the simulation on an active 3D display with an NVIDIA 3D Vision setup. For this implementation, I developed a quad-buffered stereo renderer.

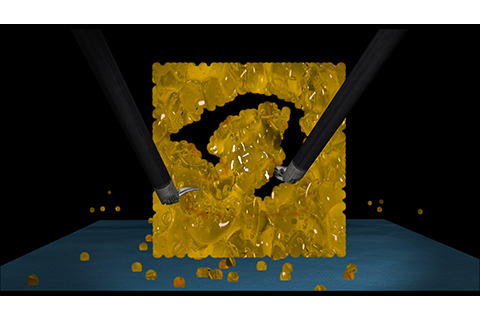

Rendering for Position Based Dynamics

I developed rendering support for a Position Based Dynamics (PBD) physics system. The PBD objects could be visualized with three methods: billboard rendering, screen-space particle surface rendering, and mesh generation. The following video demonstrates an early prototype of dissection using the billboard rendering method.

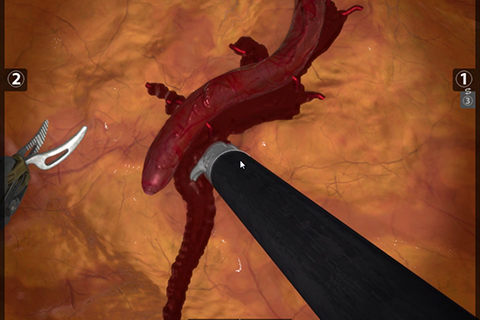

Screen-Space Particle Fluid Rendering

The next video shows a physics-based particle system representing blood. A screen-space rendering technique is used to render the blood as a continuous surface. Notice how the surgical instrument can interact with and displace the blood, and the blood flows around the jaws of the instrument.

Exponential Variance Shadow Mapping

For the shadows in the simulator, I implemented Exponential Variance Shadow Maps (EVSM). A few screenshots can be seen below. The EVSM shadows are also visible in any video on this site that shows the simulation.
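For readers unfamiliar with EVSM, the following is a minimal CPU-side sketch of the general technique, not the simulator's shader code. Depth is warped with a positive and a negative exponential, the first two moments of each warp are averaged over a filter footprint, and Chebyshev's inequality gives an upper bound on the lit fraction. The exponents `c_pos` and `c_neg`, the `min_variance` clamp, and all function names are assumptions made for this example.

```python
import math


def warp_depth(depth, c_pos=40.0, c_neg=5.0):
    """Apply the positive and negative exponential warps to a depth in [0, 1]."""
    d = 2.0 * depth - 1.0  # remap to [-1, 1]
    return math.exp(c_pos * d), -math.exp(-c_neg * d)


def chebyshev_upper_bound(mean, mean_sq, t, min_variance):
    """Upper bound on the fraction of the footprint that does not occlude depth t."""
    if t <= mean:
        return 1.0  # receiver is in front of the average occluder
    variance = max(mean_sq - mean * mean, min_variance)
    delta = t - mean
    return variance / (variance + delta * delta)


def evsm_visibility(occluder_depths, receiver_depth, min_variance=1e-4):
    """Estimate visibility from a filter footprint of shadow-map depths."""
    warped = [warp_depth(d) for d in occluder_depths]
    n = len(warped)
    # Filtered moments: a box filter stands in for hardware or blur filtering.
    pos_mean = sum(p for p, _ in warped) / n
    pos_mean_sq = sum(p * p for p, _ in warped) / n
    neg_mean = sum(q for _, q in warped) / n
    neg_mean_sq = sum(q * q for _, q in warped) / n
    pos_t, neg_t = warp_depth(receiver_depth)
    # Both warps give valid upper bounds, so the smaller one is the tighter estimate.
    return min(
        chebyshev_upper_bound(pos_mean, pos_mean_sq, pos_t, min_variance),
        chebyshev_upper_bound(neg_mean, neg_mean_sq, neg_t, min_variance),
    )


lit = evsm_visibility([0.5, 0.5, 0.5, 0.5], 0.4)        # receiver in front of occluders
shadowed = evsm_visibility([0.5, 0.5, 0.5, 0.5], 0.9)   # receiver behind occluders
penumbra = evsm_visibility([0.2, 0.2, 0.8, 0.8], 0.8)   # half the footprint occludes
```

Because the stored moments can be filtered like ordinary texture data, this family of techniques produces soft shadow edges, and taking the minimum of the two bounds is what reduces the light bleeding seen with plain variance shadow maps.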